Using Taints on Kubernetes Nodes

Mr.Lee 2025-01-02 17:29:23 Docker Kubernetes

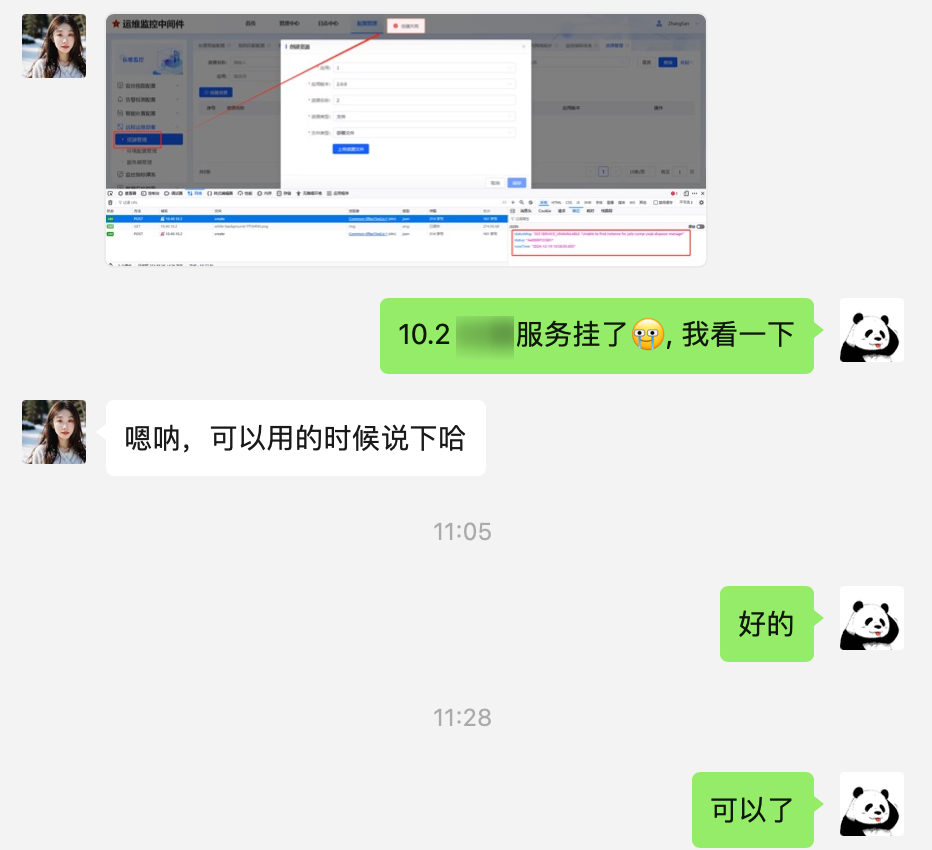

The Kubernetes cluster in our test environment currently has two machines: 10.2 and 10.8 (10.40.10.2 and 10.40.10.8).

# Background

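To standardize on a single image source, we introduced a Docker Registry and changed the image pull policy to `IfNotPresent`. However, the 10.8 machine could never pull images from the Docker Registry, so every service scheduled onto 10.8 failed to deploy. To keep services reachable, we added a taint to 10.8 so that the scheduler would stop placing services on it.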

```shell
kubectl taint node 10.40.10.8 key=value:NoSchedule
```
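A taint has the form `<key>=<value>:<effect>`. With `NoSchedule`, the scheduler stops placing new pods on the node unless they carry a matching toleration; pods already running there are left alone (`NoExecute` would also evict them, `PreferNoSchedule` is only a soft preference). Removing a taint uses the same `kubectl taint` command with a trailing `-` after the key. The sketch below wraps both operations; `taint_node` and `untaint_node` are hypothetical helper names, not kubectl subcommands.

```shell
# Hypothetical helpers wrapping the kubectl taint commands used in this post.

# Add a taint of the form <key>=<value>:<effect>; the effect defaults to NoSchedule.
taint_node() {
  local node="$1" key="$2" value="$3" effect="${4:-NoSchedule}"
  # Reject anything that is not one of the three taint effects Kubernetes supports.
  case "$effect" in
    NoSchedule|PreferNoSchedule|NoExecute) ;;
    *) echo "invalid taint effect: $effect" >&2; return 1 ;;
  esac
  kubectl taint node "$node" "${key}=${value}:${effect}"
}

# Remove every taint with the given key (the trailing "-" means "remove").
untaint_node() {
  local node="$1" key="$2"
  kubectl taint node "$node" "${key}-"
}
```

For example, `taint_node 10.40.10.8 key value` reproduces the command above, and `untaint_node 10.40.10.8 key` undoes it.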


# Today's notes

Because 10.2 has run out of resources, newly deployed services stay stuck in the Pending state.
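Pods that are still downloading images also report the phase Pending, so the scheduler's `FailedScheduling` events are what show that the problem is really "no node fits". A minimal sketch for checking this, using the standard `status.phase` (pods) and `reason` (events) field selectors; the function names are hypothetical. The event message usually names the cause, for example insufficient CPU or memory, or a taint the pod does not tolerate.

```shell
# Hypothetical helpers for diagnosing pods stuck in Pending.

# List every pod whose phase is Pending, across all namespaces.
list_pending_pods() {
  kubectl get pods --all-namespaces --field-selector=status.phase=Pending
}

# Show scheduler failures, newest last; the MESSAGE column explains why no node fit.
show_scheduling_failures() {
  kubectl get events --all-namespaces --field-selector=reason=FailedScheduling \
    --sort-by=.lastTimestamp
}
```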

The temporary workaround: change the image pull policy to `Always`, remove the taint from 10.8, and let services be scheduled onto that machine.
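One way to switch the pull policy per Deployment is a JSON patch. This sketch assumes each service is a Deployment whose image lives in the first container (index 0) of the pod template; `set_pull_policy` is a hypothetical helper name. Editing the pod template triggers a rolling update, so the replacement pods go through the scheduler again and, once the taint is gone, may land on 10.8.

```shell
# Hypothetical helper: set imagePullPolicy on the first container of a Deployment.
# Assumption: the container to change is containers[0] in the pod template.
set_pull_policy() {
  local ns="$1" deploy="$2" policy="$3"
  # Only the three values Kubernetes accepts for imagePullPolicy are allowed.
  case "$policy" in
    Always|IfNotPresent|Never) ;;
    *) echo "invalid imagePullPolicy: $policy" >&2; return 1 ;;
  esac
  kubectl -n "$ns" patch deployment "$deploy" --type=json \
    -p "[{\"op\":\"replace\",\"path\":\"/spec/template/spec/containers/0/imagePullPolicy\",\"value\":\"${policy}\"}]"
}
```

For example, `set_pull_policy omm omm-gateway Always`; to cover a whole namespace, loop over `kubectl -n omm get deployments -o jsonpath='{.items[*].metadata.name}'`.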

```shell
# Remove the taint from 10.8
❯ kubectl taint nodes 10.40.10.8 key-
# Show the details of the 10.8 machine
❯ kubectl describe node 10.40.10.8
Name:               10.40.10.8
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/instance-type=k3s
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=10.40.10.8
                    kubernetes.io/os=linux
                    node.kubernetes.io/instance-type=k3s
Annotations:        alpha.kubernetes.io/provided-node-ip: 10.40.10.8
                    flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"3a:e6:a8:04:80:e9"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 10.40.10.8
                    k3s.io/hostname: 10.40.10.8
                    k3s.io/internal-ip: 10.40.10.8
                    k3s.io/node-args: ["agent","--data-dir","/var/lib/rancher/k3s","--server","https://10.40.10.2:6443","--token","********"]
                    k3s.io/node-config-hash: M43OC3ONSHZXLZ2IBB6U4SCP5SRIIYFE42JRQ5QYNM3KRKHSMUYQ====
                    k3s.io/node-env: {"K3S_DATA_DIR":"/var/lib/rancher/k3s/data/13f9723ffde84ba41d08658d407a523bcf32698f179c9ab30cc0534e1e5d2c1a"}
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Thu, 12 Dec 2024 15:35:19 +0800
Taints:             <none>
Unschedulable:      false
Lease:
  HolderIdentity:  10.40.10.8
  AcquireTime:     <unset>
  RenewTime:       Thu, 19 Dec 2024 17:43:26 +0800
Conditions:
  Type             Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----             ------  -----------------                 ------------------                ------                       -------
  MemoryPressure   False   Thu, 19 Dec 2024 17:41:26 +0800   Mon, 16 Dec 2024 04:49:59 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure     False   Thu, 19 Dec 2024 17:41:26 +0800   Mon, 16 Dec 2024 04:49:59 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure      False   Thu, 19 Dec 2024 17:41:26 +0800   Mon, 16 Dec 2024 04:49:59 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready            True    Thu, 19 Dec 2024 17:41:26 +0800   Tue, 17 Dec 2024 13:07:57 +0800   KubeletReady                 kubelet is posting ready status
Addresses:
  InternalIP:  10.40.10.8
  Hostname:    10.40.10.8
Capacity:
  cpu:                8
  ephemeral-storage:  506210820Ki
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             31596900Ki
  pods:               110
Allocatable:
  cpu:                8
  ephemeral-storage:  492441885310
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             31596900Ki
  pods:               110
System Info:
  Machine ID:                 e40653e9f03a4b6993154fb8bb7d4c99
  System UUID:                e40653e9f03a4b6993154fb8bb7d4c99
  Boot ID:                    4985cddb-f229-4215-b5ec-87254745df1c
  Kernel Version:             4.19.90-24.4.v2101.ky10.x86_64
  OS Image:                   Kylin Linux Advanced Server V10 (Sword)
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  containerd://1.7.11-k3s2
  Kubelet Version:            v1.28.6+k3s2
  Kube-Proxy Version:         v1.28.6+k3s2
PodCIDR:                      10.42.3.0/24
PodCIDRs:                     10.42.3.0/24
ProviderID:                   k3s://10.40.10.8
Non-terminated Pods:          (9 in total)
  Namespace                   Name                                   CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------                   ----                                   ------------  ----------  ---------------  -------------  ---
  kube-system                 svclb-traefik-7fdb195f-mh78c           0 (0%)        0 (0%)      0 (0%)           0 (0%)         7d2h
  monitoring                  kube-prometheus-node-exporter-nnjzs    0 (0%)        0 (0%)      0 (0%)           0 (0%)         7d2h
  monitoring                  grafana-loki-promtail-7zcb9            0 (0%)        0 (0%)      0 (0%)           0 (0%)         7d2h
  omm                         omm-ui-86cf74b685-xbtjq                0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
  omm                         omm-gateway-67768684bb-2zw7s           0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
  omm                         omm-monitor-view-7f7b855cd6-6h746      0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
  omm                         omm-indicator-data-6ddfdcf8d8-8blhh    0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
  omm                         omm-alert-manage-6fdbfb964b-lqqkp      0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
  omm                         omm-dispose-manage-5df847fc45-44vvw    0 (0%)        0 (0%)      0 (0%)           0 (0%)         94m
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests  Limits
  --------           --------  ------
  cpu                0 (0%)    0 (0%)
  memory             0 (0%)    0 (0%)
  ephemeral-storage  0 (0%)    0 (0%)
  hugepages-1Gi      0 (0%)    0 (0%)
  hugepages-2Mi      0 (0%)    0 (0%)
Events:                       <none>
```
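Rather than reading the whole `describe` output to find the `Taints:` line, the taints can be read straight from the node object with kubectl's JSONPath output. A minimal sketch; `node_taints` and `node_has_taint_key` are hypothetical helper names, and the second one relies on JSONPath printing the `[*]` results separated by spaces.

```shell
# Hypothetical helpers for checking a node's taints.

# Print the node's taints as JSON; empty output means the node has no taints.
node_taints() {
  kubectl get node "$1" -o jsonpath='{.spec.taints}'
}

# Succeed if the node has at least one taint with the given key.
node_has_taint_key() {
  # JSONPath prints all keys on one line; split them and match the key exactly.
  kubectl get node "$1" -o jsonpath='{.spec.taints[*].key}' | tr ' ' '\n' | grep -qxF -- "$2"
}
```

After the `key-` removal above, `node_has_taint_key 10.40.10.8 key` should fail, matching `Taints: <none>`.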


As you can see, all of the services in the omm namespace have now been scheduled onto 10.8.
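To confirm this without the full node description, the pods on a node can be listed with the `spec.nodeName` field selector. A small sketch; `pods_on_node` is a hypothetical helper name.

```shell
# Hypothetical helper: list pods scheduled on a node, optionally within one namespace.
pods_on_node() {
  local node="$1" ns="${2:-}"
  if [ -n "$ns" ]; then
    kubectl get pods -n "$ns" -o wide --field-selector "spec.nodeName=${node}"
  else
    kubectl get pods --all-namespaces -o wide --field-selector "spec.nodeName=${node}"
  fi
}
```

For example, `pods_on_node 10.40.10.8 omm` should show only the six omm pods listed above.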

Once I've gotten past the Docker Registry pitfall, I'll write that up too...